Content-aware dispatching algorithms

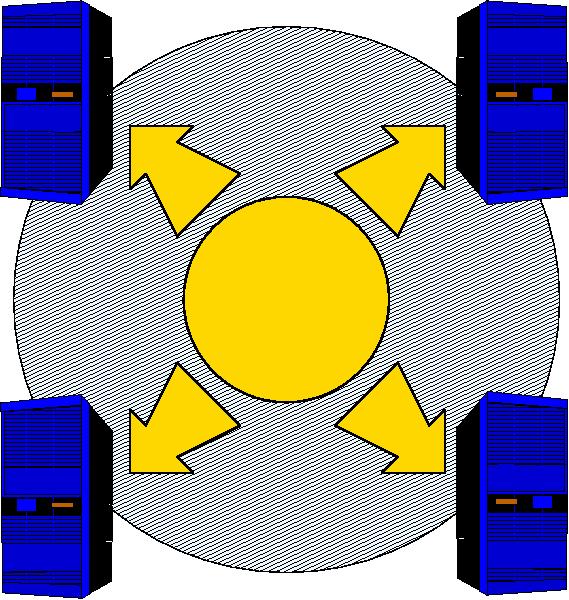

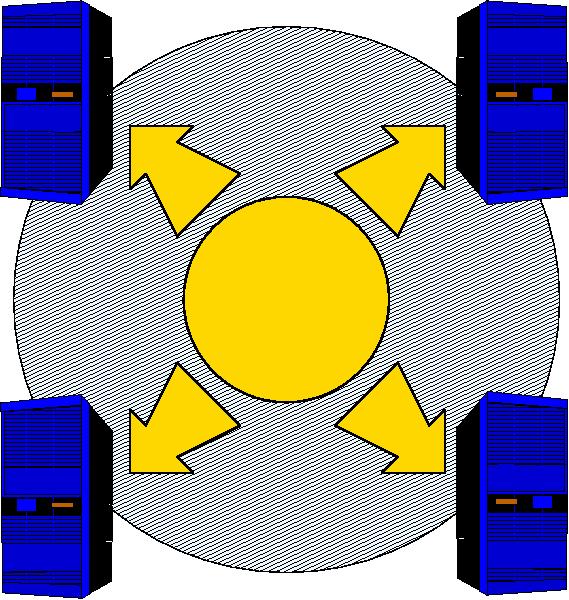

The typical Web cluster architecture consists of replicated back-end
and Web servers, and a network Web switch that routes client requests
among the nodes. We have designed and developed new content-aware
scheduling policies with the aim of improving load sharing
in Web clusters that provide multiple services such as static, dynamic,
and secure information. In particular, we have proposed the
Client Aware Policy (CAP), which classifies client
requests on the basis of their expected impact on the main server
resources, that is, network interface, CPU, and disk. At run time,
CAP schedules client requests reaching the Web cluster with the
goal of sharing all classes of services among the server nodes.
Simulations and experimental results have shown that CAP is able
to outperform locality-aware assignment policies and least-loaded
schedulers. More precisely, dispatching policies that aim to improve
locality in server caches give the best results for Web publishing
sites that provide static information and some simple database
searches. For Web sites that also provide dynamic
and secure services, CAP is more effective than state-of-the-art layer-7
Web switch policies.
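
The idea of classifying requests by the resource they are expected to stress, and then spreading each class across the nodes, can be illustrated with a minimal Python sketch. It is not the CAP implementation: the class names, the URL-based rules in `classify`, and the choice of one round-robin pointer per class are assumptions made for illustration only.

```python
from itertools import cycle

# Hypothetical service classes, named after the server resource a request
# is expected to stress most (network interface, CPU, disk).
CLASSES = ("network", "cpu", "disk")


def classify(url: str) -> str:
    """Toy classifier; these URL rules are illustrative assumptions."""
    if url.startswith("https://") or url.endswith((".cgi", ".php")):
        return "cpu"      # secure or dynamic content: assumed CPU-intensive
    if "/search" in url or "/db/" in url:
        return "disk"     # database lookups: assumed disk-intensive
    return "network"      # static files: assumed mostly network-bound


class ClassAwareDispatcher:
    """Keeps a separate round-robin pointer for every service class."""

    def __init__(self, servers):
        self._next = {c: cycle(servers) for c in CLASSES}

    def dispatch(self, url: str) -> str:
        # Requests of the same class rotate over all nodes, so no single
        # node receives all the requests of one (possibly heavy) class.
        return next(self._next[classify(url)])


dispatcher = ClassAwareDispatcher(["s1", "s2"])
for url in ["/index.html", "/report.cgi", "/stats.cgi", "/logo.png"]:
    print(url, "->", dispatcher.dispatch(url))
```

Because each class rotates independently, two consecutive CPU-intensive requests land on different nodes even when static requests arrive in between.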

Multi-tier dispatching algorithms

We have also compared the performance of several combinations
of centralized and distributed dispatching algorithms
working at the first and second layer of a multi-tier Web
cluster. Throughout the experiments, we evaluated the effectiveness
of several performance metrics, such as the number of established
TCP connections, CPU usage, and disk usage. We also
evaluated different load estimation strategies, such as computing
mean values of load samples or introducing alarm mechanisms that
temporarily exclude overloaded nodes.
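
The two load estimation strategies mentioned above can be sketched in a few lines of Python. This is a sketch under stated assumptions, not the estimator used in the experiments: the window size, the alarm threshold, the `NodeLoad` class, and the `eligible` helper are all hypothetical.

```python
from collections import deque


class NodeLoad:
    """Tracks recent load samples (e.g. CPU usage in [0, 1]) for one node."""

    def __init__(self, window=5, alarm_threshold=0.9):
        self.samples = deque(maxlen=window)   # oldest sample drops out
        self.alarm_threshold = alarm_threshold

    def report(self, value: float) -> None:
        self.samples.append(value)

    def mean(self) -> float:
        # Mean of the samples in the window smooths out short spikes.
        return sum(self.samples) / len(self.samples) if self.samples else 0.0

    def alarmed(self) -> bool:
        # Alarm: the most recent sample alone marks the node as overloaded.
        return bool(self.samples) and self.samples[-1] >= self.alarm_threshold


def eligible(nodes: dict) -> list:
    """Nodes not in alarm; falls back to all nodes if every one is alarmed."""
    ok = [name for name, node in nodes.items() if not node.alarmed()]
    return ok or list(nodes)
```

An alarmed node becomes eligible again as soon as it reports a sample below the threshold, which is what makes the exclusion temporary.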

One of the main results of this analysis is the confirmation that
least-loaded assignments do not work well when the load metric is
subject to high variations and tends to become stale. In these cases,
load coefficients should be used to avoid the worst assignment,
not to choose the best one.
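
The difference between choosing the best node and avoiding the worst one can be made concrete with a short Python sketch. The function names and the random choice among the remaining nodes are assumptions for illustration; the source does not specify how the non-worst nodes are selected.

```python
import random


def least_loaded(loads: dict) -> str:
    """Classic policy: trust the load values and pick the minimum."""
    return min(loads, key=loads.get)


def avoid_worst(loads: dict, rng=random) -> str:
    """Use the load values only to rule out the most loaded node.

    The choice among the remaining nodes is random here, which is an
    assumption of this sketch; any load-blind policy would do.
    """
    worst = max(loads, key=loads.get)
    candidates = [name for name in loads if name != worst] or [worst]
    return rng.choice(candidates)


loads = {"s1": 0.10, "s2": 0.50, "s3": 0.90}   # possibly stale samples
print(least_loaded(loads))   # always the same node until the next update
print(avoid_worst(loads))    # any node except the apparently worst one
```

With stale information, `least_loaded` keeps sending every request to the same node until the next load update, whereas `avoid_worst` spreads requests over all nodes that are not clearly overloaded.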

QoWS dispatching algorithms

In a world where many users rely on the Web for up-to-date personal
and business information and transactions, it is fundamental to build
Web systems that allow service providers to differentiate user
expectations with multi-class Service Level Agreements (SLAs).
Starting from the basic principles of network QoS, we
analyze how Quality of Web Services (QoWS) principles can be
realized in a Web site hosted on a Web-server cluster.

We propose a methodology to determine a set of
confident SLAs in a Web cluster for
multiple classes of users and services. Moreover, we implement and
compare, at the Web switch level, three QoWS-aware policies and
mechanisms that transform a best-effort Web cluster into a
QoWS-enhanced system. Our experimental results show that policies
lacking some QoS principles can provide acceptable performance for
some load and system conditions, but they do not allow the system
to guarantee the contractual SLA targets for every expected load.
We also carry out a simulation analysis to explore
alternative architectures and policies for addressing other issues,
such as system scalability and stricter SLA constraints.
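
To make the notion of a per-class SLA target concrete, the following Python sketch checks whether measured response times meet a target for each class. It assumes, purely for illustration, that an SLA is expressed as a bound on the 95th percentile of the response time; the class names, the sample values, and both helper functions are hypothetical.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of a non-empty list (p in 1..100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def sla_met(samples_by_class, targets, p=95):
    """For each class, is the p-th percentile within its target (seconds)?"""
    return {
        cls: percentile(samples_by_class[cls], p) <= targets[cls]
        for cls in targets
    }


# Hypothetical measurements (seconds) for two user classes.
measured = {
    "gold": [0.1] * 19 + [3.0],
    "bronze": [1.0] * 10 + [5.0] * 10,
}
targets = {"gold": 0.5, "bronze": 4.0}
print(sla_met(measured, targets))
```

A percentile bound tolerates a few slow outliers, as in the `gold` samples, while still failing a class whose slow responses are frequent, as in the `bronze` samples.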

Target architecture

All current dispatching algorithms are evaluated on a Web cluster
represented by a reverse proxy implemented with the Apache Web Server
(version 2.0), using both the mod_rewrite and mod_proxy
modules. The target platform is the Linux
operating system. The proposed architecture allows for a very quick
setup and evaluation of dispatching algorithms on a Web cluster.
The policies that prove more stable and efficient are integrated
into our prototypes.
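
One way a dispatching policy could be plugged into such a reverse proxy is mod_rewrite's external-program `RewriteMap` (`prg:`), where Apache writes one lookup key per line to the program's standard input and reads one result line back. Whether the HiPerWeb setup uses this mechanism is not stated in the text, so the following Python sketch is an assumption; the back-end addresses, the script path, and the plain round-robin policy are placeholders.

```python
import io
from itertools import cycle

# Hypothetical back-end addresses; replace with the real cluster nodes.
BACKENDS = ["10.0.0.11:80", "10.0.0.12:80"]

# Assumed Apache configuration (path and map name are placeholders):
#   RewriteEngine On
#   RewriteMap  dispatch prg:/usr/local/bin/dispatch.py
#   RewriteRule ^/(.*)$ http://${dispatch:$1}/$1 [P,L]
# The [P] flag hands the rewritten URL to mod_proxy.


def serve(requests, replies, backends=BACKENDS):
    """Answer one lookup per input line, as a `prg:` rewrite map expects."""
    rr = cycle(backends)
    for line in requests:
        key = line.rstrip("\n")   # requested path; unused by round-robin,
                                  # but a content-aware policy would read it
        replies.write(next(rr) + "\n")
        replies.flush()           # Apache waits for each reply line


# Demonstration with in-memory streams instead of stdin/stdout.
out = io.StringIO()
serve(io.StringIO("index.html\nreport.cgi\nlogo.png\n"), out)
print(out.getvalue(), end="")
```

In a deployment, the script would call `serve(sys.stdin, sys.stdout)`; replacing the round-robin choice with another policy only requires changing the line that selects the back-end.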



http://www.ce.uniroma2.it/hiperweb/algorithms.html

Last updated: December 1, 2002